Fast, correct SQL matters now. Cloud and on-prem systems both face higher costs and slower dashboards when poorly written statements inflate compute and I/O. Good design cuts latency, reduces bills, and keeps users productive.

This short guide focuses on practical techniques you can apply today. Expect clear advice on indexing, avoiding SELECT *, smarter JOINs, and staging logic with CTEs. We also cover pagination, TOP, and when stored procedures help reduce network chatter.

Use execution plans and missing-index DMVs as signals, not mandates. Watch rows scanned versus returned and tempdb spills, and avoid over-indexing that hurts writes.

By the end you’ll get a prioritized checklist to improve runtime and lower resource use for your heaviest workloads. Read on for targeted tips and examples that deliver measurable gains.

Key Takeaways

- Return only needed columns; avoid SELECT * to cut I/O.

- Favor joins on keys and replace expensive ORs with UNION ALL when possible.

- Use plans and DMVs to guide changes, and avoid over-indexing.

- Stage logic with CTEs and use TOP or pagination to limit transferred data.

- Encapsulate repeatable work in stored procedures for plan reuse and fewer round trips.

Understand the intent: target faster, cheaper, and correct SQL

Begin with clear success criteria: correctness first, then reduce runtime and resource use to lower costs in cloud and on-prem platforms.

Align each statement with business needs. Return only the columns and rows required. Pick filters and join patterns that reflect data distribution and cardinality.

Prefer faster constructs like UNION ALL when deduplication is unnecessary, but use UNION or outer joins when correctness demands them. Filter early and project narrow columns so the optimizer can use tighter access paths and fewer intermediate rows.

- Define success: correct results, then minimal runtime and scanned data.

- Weigh trade-offs: covering columns speed reads but may slow writes; match design to read vs. write loads.

- Test patterns: EXISTS can outperform IN for large subqueries; refactor OR-heavy logic into unions when needed.

Use execution plans and measured metrics—duration, rows read vs. returned—to guide changes. Iterate with evidence so behavior becomes predictable across platforms and data types.

Indexing that moves the needle

The right index can turn a full table scan into a few rapid lookups and large I/O savings. Choose a clustered key that supports the most common ranges or primary lookups, for example order_date on a fact table. Most tables benefit from a clustered index and a primary key, which stabilize row location and give the optimizer a reliable access path; a heap is usually reasonable only for short-lived staging data.

Choose clustered, nonclustered, and composite wisely

Clustered indexes store rows in key order and favor range scans. Nonclustered indexes point to base rows and speed selective predicates.

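For composite indexes, lead with the columns that are filtered most consistently, placing equality predicates ahead of range predicates and the more selective column first. Remember the left-to-right effect: an index on (a, b) supports a seek on a alone or on a and b together, but a filter on b alone usually falls back to a scan.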
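The left-to-right effect is easy to observe on a small scale. The sketch below is illustrative only: it uses Python's built-in sqlite3 module as a stand-in for SQL Server, and the orders table and index name are hypothetical. SQLite's EXPLAIN QUERY PLAN plays the role that an execution plan would play in SQL Server, so the exact wording of the output will differ on other engines.

```python
import sqlite3

# SQLite is a stand-in for SQL Server; table and index names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    " order_id INTEGER PRIMARY KEY,"
    " customer_id INTEGER, order_date TEXT, total REAL)"
)
# Composite index: customer_id leads, order_date follows.
conn.execute(
    "CREATE INDEX ix_orders_customer_date ON orders (customer_id, order_date)"
)


def plan(sql):
    """Return the planner's description of how it would run the statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)


# Filter on the leading column: the index can be searched.
leading = plan("SELECT total FROM orders WHERE customer_id = 42")
# Filter on both columns: the index can still be searched.
both = plan(
    "SELECT total FROM orders "
    "WHERE customer_id = 42 AND order_date >= '2024-01-01'"
)
# Filter on the trailing column only: the index cannot be searched.
trailing_only = plan("SELECT total FROM orders WHERE order_date >= '2024-01-01'")

print("leading column :", leading)
print("both columns   :", both)
print("trailing only  :", trailing_only)
```

The first two plans should mention the index, while the trailing-only filter should show a scan of the table. If date-only filters are common in your workload, that is a signal to reconsider the column order or add a second, narrower index.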

Covering indexes vs. INCLUDE columns

INCLUDE columns are stored only at the leaf level of a nonclustered index, so they can eliminate key lookups and cut page reads for hot queries without widening the index key. Use them for critical paths only and measure the write amplification they add.
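To see what "covering" means in practice, here is a minimal sketch using Python's sqlite3 module as a stand-in. SQLite has no INCLUDE clause, so this example approximates a covering index by appending the extra columns to the index key, which is an assumption of the sketch and not how you would write it in SQL Server. The table and index names are hypothetical.

```python
import sqlite3

# SQLite stand-in; it has no INCLUDE clause, so extra columns go in the key.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    " order_id INTEGER PRIMARY KEY, customer_id INTEGER,"
    " order_date TEXT, total REAL, notes TEXT)"
)


def plan(sql):
    """Return the planner's description of how it would run the statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)


query = "SELECT order_date, total FROM orders WHERE customer_id = 42"

# Narrow index: finds matching rows, then must visit the table for the
# other two columns (the equivalent of a key lookup).
conn.execute("CREATE INDEX ix_orders_customer ON orders (customer_id)")
narrow_plan = plan(query)

# Fold the narrow index into one that also carries the selected columns,
# rather than keeping both around.
conn.execute("DROP INDEX ix_orders_customer")
conn.execute(
    "CREATE INDEX ix_orders_customer_cover "
    "ON orders (customer_id, order_date, total)"
)
covering_plan = plan(query)

print("narrow  :", narrow_plan)
print("covering:", covering_plan)
```

With the wider index the plan should report a covering index, meaning the table itself is never touched for this query. The cost is a larger index that every write to those columns must maintain, which is why this is worth doing only for hot paths.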

Avoid over-indexing and act on hints pragmatically

Each extra index increases cost on INSERT/UPDATE/DELETE and consumes storage. Treat missing index suggestions from execution plan XML and DMVs as starting points. Compare against existing indexes and fold or trim suggestions rather than creating many new ones.

- Watch symptoms: wide scans, high logical reads, and frequent key lookups point to under-indexing.

- Review often: remove unused indexes and consolidate overlaps to lower maintenance cost.

- Text search: prefer full-text indexing for heavy free-text needs instead of leading-wildcard LIKE scans.

Stop using SELECT * and return only the columns you need

Selecting only needed fields cuts scanned bytes and speeds result delivery. SELECT * pulls every column, forcing the engine to read and transmit extra data. This increases memory grants, expands network payloads, and raises the chance of tempdb spills on large operations.

Column-level selectivity reduces data scanned and network I/O

Explicitly list the columns your application needs. Narrow projection helps nonclustered indexes cover more data and reduces key lookups that inflate logical reads.

In cloud warehouses, scanned bytes often map to cost. Returning only a few fields instead of the entire table lowers bills and improves runtime. In a typical customers table, prefer customer_name, customer_email, customer_phone over SELECT *.

- SELECT * forces reads of unused columns, growing I/O and network time.

- Projecting fields enables narrower indexes and fewer key lookups, improving overall performance.

- Use views or stored procedures that expose specific fields per use case to prevent accidental SELECT * from client code.

- Apply linters and code-review checks to flag SELECT * and refactor into named lists; compare estimated and actual row sizes after changes.

Also note: SELECT * can break downstream consumers when schemas change and can hide fields that should be removed. Return only what you need to keep the database lean and predictable.
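Both costs of SELECT *, the extra payload and the fragility under schema change, can be shown with a few lines. This is a rough sketch using Python's sqlite3 module as a stand-in; the notes and loyalty_tier columns are hypothetical additions to the customers example, and the character count is only a crude proxy for bytes on the wire.

```python
import sqlite3

# SQLite stand-in; notes and loyalty_tier are hypothetical columns.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers ("
    " customer_id INTEGER PRIMARY KEY, customer_name TEXT,"
    " customer_email TEXT, customer_phone TEXT, notes TEXT)"
)
conn.execute(
    "INSERT INTO customers VALUES (1, 'Ada', 'ada@example.com', '555-0100', ?)",
    ("x" * 10_000,),  # one wide column the application never displays
)

STAR = "SELECT * FROM customers"
EXPLICIT = "SELECT customer_name, customer_email, customer_phone FROM customers"


def column_names(sql):
    """Names of the columns a statement returns."""
    return [d[0] for d in conn.execute(sql).description]


def payload_chars(sql):
    """Crude payload size: characters across every returned value."""
    return sum(len(str(v)) for row in conn.execute(sql) for v in row)


star_payload = payload_chars(STAR)
explicit_payload = payload_chars(EXPLICIT)

star_before = column_names(STAR)
explicit_before = column_names(EXPLICIT)

# A schema change elsewhere in the organization.
conn.execute("ALTER TABLE customers ADD COLUMN loyalty_tier TEXT")

star_after = column_names(STAR)
explicit_after = column_names(EXPLICIT)

print("payload:", star_payload, "vs", explicit_payload)
print("SELECT * shape changed:", star_before != star_after)
print("explicit shape changed:", explicit_before != explicit_after)
```

The explicit list returns a fraction of the data and keeps the same shape after the ALTER TABLE, while the SELECT * result silently gains a column that positional client code may not expect.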

Write smarter JOINs and avoid performance-killing OR predicates

Good join design prevents needless row explosion and keeps runtimes small. Default to INNER JOIN to return only matching rows. Use LEFT JOIN only when you must keep all left-side rows; avoid RIGHT JOIN for readability.

Join on keys. Prefer primary/foreign key pairs so the engine can use an index and avoid multiplicative results. When business logic requires multiple equalities, use composite keys and align composite index order with the most selective column first.

Replace OR across columns with UNION patterns

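OR across columns or tables often prevents a single index seek, so the engine evaluates each leg separately or falls back to scans, inflating reads and CPU. In one example joining Production.Product and Sales.SalesOrderDetail on ProductID OR rowguid, reads jumped to ~1.2M.

Rewrite each OR branch as its own SELECT and combine with UNION or UNION ALL. The rewrite cut reads to ~750 and dropped execution time to under one second in that example. A row that satisfies both branches appears twice under UNION ALL, so use UNION, or exclude the first branch's matches in the second branch, when duplicates would change the result.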

- Advice: Use UNION ALL when deduplication is not needed for the best performance.

- Check plans: Inspect execution plan shapes—nested loops, hash, or merge joins—and tune indexes to enable the most efficient physical join.

- Validate results: After refactoring, confirm row counts and duplicates with COUNT(DISTINCT ...) or EXCEPT checks to keep correctness intact.
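The rewrite and its validation step can be sketched as follows. This uses Python's sqlite3 module as a stand-in for SQL Server, with hypothetical products and order_details tables (alt_code plays the role of the second join column). It checks result equivalence only; it does not reproduce the read counts quoted above.

```python
import sqlite3

# SQLite stand-in; tables and the alt_code column are hypothetical.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE products (product_id INTEGER PRIMARY KEY, alt_code TEXT NOT NULL);
CREATE TABLE order_details (
    detail_id INTEGER PRIMARY KEY,
    product_id INTEGER NOT NULL,
    alt_code TEXT NOT NULL
);
INSERT INTO products VALUES (1, 'A'), (2, 'B'), (3, 'C');
INSERT INTO order_details VALUES
    (10, 1, 'A'),   -- matches product 1 on both columns
    (11, 2, 'Z'),   -- matches product 2 on product_id only
    (12, 99, 'C'),  -- matches product 3 on alt_code only
    (13, 98, 'Y');  -- matches nothing
""")

SELECT = "SELECT p.product_id, d.detail_id FROM products p JOIN order_details d "
OR_JOIN = SELECT + "ON p.product_id = d.product_id OR p.alt_code = d.alt_code"
BY_ID = SELECT + "ON p.product_id = d.product_id"
BY_CODE = SELECT + "ON p.alt_code = d.alt_code"


def fetch(sql):
    """Run a statement and return its rows in a stable order."""
    return sorted(conn.execute(sql).fetchall())


or_rows = fetch(OR_JOIN)
union_rows = fetch(BY_ID + " UNION " + BY_CODE)
union_all_rows = fetch(BY_ID + " UNION ALL " + BY_CODE)
# Second branch skips pairs the first branch already returned. The plain <>
# is safe here only because both product_id columns are NOT NULL.
guarded_rows = fetch(
    BY_ID + " UNION ALL " + BY_CODE + " AND p.product_id <> d.product_id"
)

print("OR               :", or_rows)
print("UNION            :", union_rows)
print("UNION ALL        :", union_all_rows)
print("UNION ALL guarded:", guarded_rows)
```

Plain UNION ALL returns the pair that matches on both columns twice, which is exactly the kind of difference a row-count check catches. UNION and the guarded UNION ALL both reproduce the OR result; which of the two is cheaper depends on the data and is worth measuring.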

EXISTS, IN, OR, and UNION ALL: pick the right filter pattern

Filter design matters: the same business intent can produce very different execution costs.

EXISTS shines for large correlated subqueries. It stops on the first match, so the engine often builds a leaner plan and reads fewer pages.

Use IN for small, static lists or precomputed sets. Large, changing lists can make IN slower than an EXISTS-based rewrite.

When to choose each pattern

- EXISTS: membership checks against big tables; short-circuits on first qualifying row and reduces work.

- IN: small, fixed lists or tiny result sets from subqueries; simpler but can cost more with large data.

- OR: easy to write but often forces multiple scans; consider separate selects combined with UNION ALL instead.

- UNION vs UNION ALL: use UNION only when you must remove duplicates. UNION ALL is faster because it skips deduplication.

Example patterns to test:

- WHERE EXISTS (SELECT 1 FROM orders o WHERE o.customer_id = c.customer_id)

- WHERE customer_id IN (SELECT customer_id FROM orders)

Validate changes with execution plans. Look for semi-join operators, fewer sorts, and lower I/O after switching to EXISTS or UNION ALL. That evidence keeps correctness and performance aligned.
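Before comparing plans, it helps to confirm that the candidate patterns really return the same rows. The sketch below runs the two example patterns above, plus a plain join for contrast, using Python's sqlite3 module as a stand-in; the sample data is made up for illustration.

```python
import sqlite3

# SQLite stand-in with a tiny, made-up data set.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, customer_name TEXT);
CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER NOT NULL);
CREATE INDEX ix_orders_customer ON orders (customer_id);
INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
INSERT INTO orders VALUES (100, 1), (101, 1), (102, 3);
""")

EXISTS_SQL = (
    "SELECT c.customer_id FROM customers c "
    "WHERE EXISTS (SELECT 1 FROM orders o WHERE o.customer_id = c.customer_id) "
    "ORDER BY c.customer_id"
)
IN_SQL = (
    "SELECT c.customer_id FROM customers c "
    "WHERE c.customer_id IN (SELECT customer_id FROM orders) "
    "ORDER BY c.customer_id"
)
# A plain join is NOT a membership check: it repeats customers per order.
JOIN_SQL = (
    "SELECT c.customer_id FROM customers c "
    "JOIN orders o ON o.customer_id = c.customer_id "
    "ORDER BY c.customer_id"
)

exists_rows = conn.execute(EXISTS_SQL).fetchall()
in_rows = conn.execute(IN_SQL).fetchall()
join_rows = conn.execute(JOIN_SQL).fetchall()

print("EXISTS:", exists_rows)
print("IN    :", in_rows)
print("JOIN  :", join_rows)
```

EXISTS and IN agree, and each customer appears once no matter how many orders they have. The join returns customer 1 twice, which is why replacing a membership check with a join usually needs a DISTINCT and more work.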

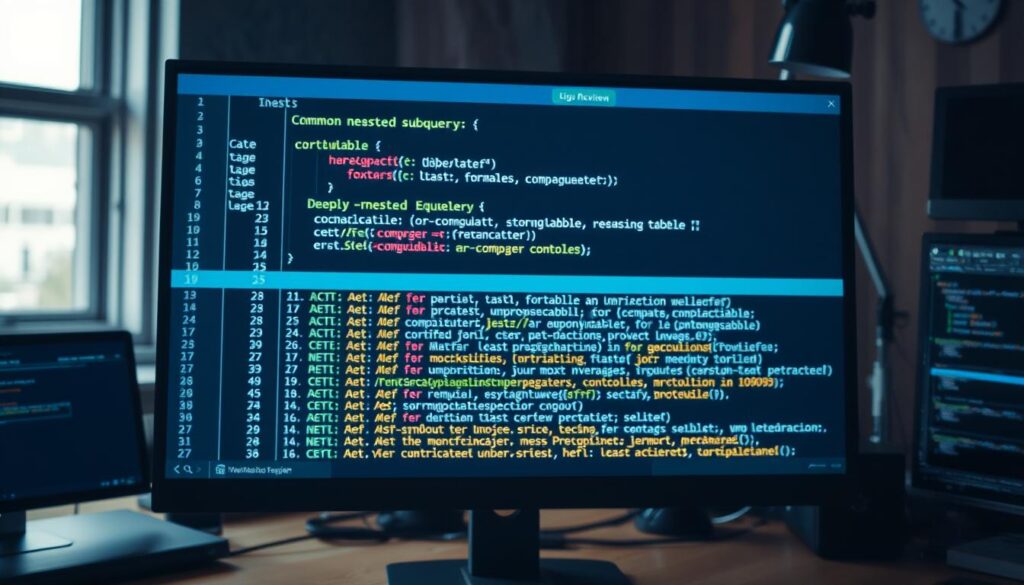

CTEs over deeply nested subqueries for clarity and maintainability

Break complex selects into named steps so each transformation is easy to test and reason about. Common table expressions (CTEs) act as readable scratchpads. They let you split big statements into small, labeled pieces that match business intent.

Use CTEs to stage logic and validate intermediate rows. Select from each CTE to check counts and key distributions before composing the final result. This makes debugging faster and reduces regressions in reports.

Whether a CTE changes the plan depends on the engine: SQL Server expands it inline like a view, while some other engines may materialize it. Treat the main gain as readability, and measure plans before and after to confirm any performance effect.

- Name CTEs by business meaning (for example recent_orders_30d) so reviews focus on intent.

- Isolate transformations to validate intermediate results and keep statements short.

- Test incrementally: SELECT from each CTE to confirm distributions and keys.

Tip: Avoid excessive chaining that hides where predicates belong. Push selective filters into the earliest CTE stages to preserve performance and clean design.
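Staging and incremental testing might look like the sketch below. It uses Python's sqlite3 module as a stand-in, a hypothetical orders table, and a fixed as_of date instead of the current date so the output is repeatable. The CTE name recent_orders_30d follows the naming suggestion above; customer_totals is an invented second stage.

```python
import sqlite3

# SQLite stand-in; the orders table and its rows are made up.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (
    order_id INTEGER PRIMARY KEY, customer_id INTEGER,
    order_date TEXT, total REAL
);
INSERT INTO orders VALUES
    (1, 10, '2024-06-25', 50.0),
    (2, 10, '2024-06-01', 20.0),
    (3, 11, '2024-06-20', 80.0),
    (4, 12, '2024-03-01', 99.0);
""")

# Stage 1: the selective date filter lives in the earliest CTE.
RECENT = """
recent_orders_30d AS (
    SELECT customer_id, total
    FROM orders
    WHERE order_date >= date(:as_of, '-30 days')
)"""

# Stage 2: aggregate only the rows that survived stage 1.
TOTALS = """
customer_totals AS (
    SELECT customer_id, SUM(total) AS total_spend, COUNT(*) AS order_count
    FROM recent_orders_30d
    GROUP BY customer_id
)"""

params = {"as_of": "2024-06-30"}  # fixed date keeps the example deterministic

# Test incrementally: check the first stage on its own before composing.
stage1_count = conn.execute(
    "WITH" + RECENT + " SELECT COUNT(*) FROM recent_orders_30d", params
).fetchone()[0]

final_rows = conn.execute(
    "WITH" + RECENT + "," + TOTALS +
    " SELECT customer_id, total_spend, order_count FROM customer_totals"
    " ORDER BY total_spend DESC, customer_id",
    params,
).fetchall()

print("rows after stage 1:", stage1_count)
print("final result      :", final_rows)
```

Checking the stage 1 count first makes it obvious whether an unexpected final number comes from the filter or from the aggregation.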

Retrieve less data: LIMIT/TOP, pagination, and scoped date ranges

Limit returned records early to keep interactive pages snappy and reduce backend load. Use TOP or LIMIT with a clear ORDER BY (for example, TOP 100 by most recent signup) so the first rows shown are stable and meaningful.

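Pagination with OFFSET or a keyset pattern keeps clients responsive. OFFSET still reads and discards the skipped rows, so deep pages get slower, while keyset pagination seeks directly past the last row already shown. Return only visible rows to cut memory and network transfer. Default filters, such as the last 30 days, make dashboards fast while still letting users widen the window when needed.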

- Keep interactive pages fast: use TOP and pagination to return a small page of rows and reduce perceived time-to-first-row.

- Make results deterministic: pair TOP with an ORDER BY that ends in a unique column so users don’t see jumps between pages.

- Scope by date: default to recent date ranges to lower scanned data and overall load on the database.

- Trim payloads: reduce returned columns and pre-aggregate when possible to shrink the data sent to clients.

- Materialize smartly: use temp tables to store expensive subresults reused across queries, but clean them up to avoid table bloat and contention.
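Here is a small sketch of the two pagination styles side by side, using Python's sqlite3 module as a stand-in. SQLite spells the row limit as LIMIT where SQL Server uses TOP or OFFSET ... FETCH, and the signups table is hypothetical. The ordering ends in the unique user_id so ties on signup_date cannot reshuffle pages.

```python
import sqlite3

# SQLite stand-in; signups is a hypothetical table with tied dates.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE signups (user_id INTEGER PRIMARY KEY, signup_date TEXT NOT NULL)"
)
conn.executemany(
    "INSERT INTO signups VALUES (?, ?)",
    [(i, "2024-01-%02d" % ((i + 1) // 2)) for i in range(1, 11)],
)
conn.execute("CREATE INDEX ix_signups_date_user ON signups (signup_date, user_id)")

PAGE_SIZE = 3
BASE = "SELECT user_id, signup_date FROM signups"
# Most recent first; user_id breaks ties so the order is fully determined.
ORDER = " ORDER BY signup_date DESC, user_id DESC LIMIT ?"


def first_page():
    return conn.execute(BASE + ORDER, (PAGE_SIZE,)).fetchall()


def next_page_keyset(last_date, last_id):
    """Seek past the last row the client has already seen."""
    return conn.execute(
        BASE + " WHERE signup_date < ? OR (signup_date = ? AND user_id < ?)" + ORDER,
        (last_date, last_date, last_id, PAGE_SIZE),
    ).fetchall()


def page_by_offset(page_number):
    """Skip (page_number - 1) pages; skipped rows are still read."""
    return conn.execute(
        BASE + ORDER + " OFFSET ?", (PAGE_SIZE, (page_number - 1) * PAGE_SIZE)
    ).fetchall()


page1 = first_page()
last_id, last_date = page1[-1]
page2_keyset = next_page_keyset(last_date, last_id)
page2_offset = page_by_offset(2)

print("page 1         :", page1)
print("page 2 (keyset):", page2_keyset)
print("page 2 (offset):", page2_offset)
```

Both styles return the same second page here. The difference shows up at depth: the OFFSET version has to walk past every skipped row, while the keyset version starts from the remembered key.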

Some platforms cache repeated queries; structure predictable, parameterized statements to benefit from that while meeting freshness needs. These small changes together yield measurable gains in query performance and user experience.

Use stored procedures for repeatable, parameterized operations

Encapsulate repeatable work in stored procedures so applications send fewer statements and get more consistent performance.

Procedures wrap complex logic into reusable blocks, reduce app-to-database round trips, and often benefit from cached execution plans that are reused across calls. Parameters enable dynamic filtering, for example: EXEC find_most_recent_customer @store_id = 1188.

Use them for reporting endpoints, recurring ETL steps, and multi-step operations that need transaction scoping. Strongly typed parameters and input validation lead to stable selectivity and fewer plan surprises.

Cut network chatter and leverage plan reuse

- Centralize statements: keep business logic in procedures to minimize chatty calls and encourage plan reuse for consistent latency.

- Parameterize: avoid string concatenation. Parameters are secure and reduce parsing overhead for repeated queries.

- Usage patterns: reporting, scheduled ETL, and complex transactions benefit most from routines.

- Observability: capture row counts and durations inside procedures to monitor execution and troubleshoot in production.

- Maintainability: avoid deep nesting and excessive business logic inside procedures; balance complexity with testability.
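The parameterization point can be illustrated without a procedure at all. SQLite has no stored procedures, so the sketch below uses a Python function as a stand-in for the find_most_recent_customer example; the customers schema and sample rows are hypothetical, and the unsafe variant is included only for contrast.

```python
import sqlite3

# SQLite stand-in; a Python function plays the role of the procedure.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (
    customer_id INTEGER PRIMARY KEY, customer_name TEXT,
    store_id INTEGER, signup_date TEXT
);
INSERT INTO customers VALUES
    (1, 'Ada',   1188, '2024-05-01'),
    (2, 'Grace', 1188, '2024-06-15'),
    (3, 'Linus', 2000, '2024-06-20');
""")


def find_most_recent_customer(conn, store_id):
    """Parameterized: store_id is bound as a value, never parsed as SQL."""
    return conn.execute(
        "SELECT customer_id, customer_name FROM customers "
        "WHERE store_id = ? "
        "ORDER BY signup_date DESC, customer_id DESC LIMIT 1",
        (store_id,),
    ).fetchone()


def find_most_recent_customer_unsafe(conn, store_id):
    """Anti-pattern, for contrast only: input is spliced into the SQL text."""
    sql = (
        "SELECT customer_id, customer_name FROM customers "
        f"WHERE store_id = {store_id} "
        "ORDER BY signup_date DESC, customer_id DESC LIMIT 1"
    )
    return conn.execute(sql).fetchone()


hostile = "1188 OR 1=1"

normal_result = find_most_recent_customer(conn, 1188)
safe_result = find_most_recent_customer(conn, hostile)
unsafe_result = find_most_recent_customer_unsafe(conn, hostile)

print("normal call        :", normal_result)
print("hostile, bound     :", safe_result)
print("hostile, spliced in:", unsafe_result)
```

With a bound parameter the hostile string is just a value that matches no store, so nothing comes back. Spliced into the text, the same string rewrites the filter and leaks a customer from another store. The statement text of the bound version also stays identical across calls, which is what lets an engine reuse its plan.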

Schema and storage strategies: normalization, denormalization, and partitioning

A balanced model that mixes normalized entities and targeted denormalization keeps both integrity and speed.

Normalize core entities such as customers and products to at least 3NF so the database enforces correctness and reduces anomalies.

Normalize core entities; denormalize analytics paths thoughtfully

Keep authoritative tables normalized for safe updates and clean relationships. This preserves single sources of truth for key values like customer_id.

Denormalize read-heavy analytic paths where frequent reporting causes many joins. Duplicate a few columns into reporting tables to avoid expensive join chains.

Partition large tables; shard when horizontal scale is the bottleneck

Partition big tables by date or a logical key so queries prune irrelevant ranges and reduce I/O. Maintenance tasks like index rebuilds become faster per partition.

Sharding distributes rows across separate nodes by a shard key when a single instance cannot handle the load. Plan sharding only when vertical scaling and partitioning no longer meet throughput needs.

Choose appropriate data types to speed comparisons and aggregates

Right-size column types: prefer integers and narrow numeric types for joins and aggregations. Smaller types save storage, CPU, and cache pressure.

Batch large updates and backfills to limit locks, transaction log growth, and blocking during heavy write operations. Before partitioning or sharding, test co-location and index strategies, because both can complicate joins and indexes.
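A batched backfill can be as simple as a loop that updates a bounded number of rows and commits each time. The sketch below uses Python's sqlite3 module as a stand-in; the events table, the status values, and the batch size are all hypothetical, and a real batch size should come from testing against your own lock and log behavior.

```python
import sqlite3

# SQLite stand-in; events and its status values are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (event_id INTEGER PRIMARY KEY, status TEXT)")
conn.executemany("INSERT INTO events (status) VALUES (?)", [("new",)] * 1000)
conn.commit()


def backfill_in_batches(conn, batch_size):
    """Archive 'new' rows a batch at a time; return how many batches did work."""
    batches = 0
    while True:
        cur = conn.execute(
            "UPDATE events SET status = 'archived' "
            "WHERE event_id IN ("
            "  SELECT event_id FROM events"
            "  WHERE status = 'new' ORDER BY event_id LIMIT ?)",
            (batch_size,),
        )
        # Commit per batch so each transaction stays short.
        conn.commit()
        if cur.rowcount == 0:
            break  # nothing left to update
        batches += 1
    return batches


batches_run = backfill_in_batches(conn, batch_size=250)
remaining = conn.execute(
    "SELECT COUNT(*) FROM events WHERE status = 'new'"
).fetchone()[0]

print("batches run   :", batches_run)
print("rows remaining:", remaining)
```

Because every batch selects only rows that still need the change, the loop is safe to stop and restart, which matters for long backfills on a busy system.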

- Hybrid modeling: normalize authoritative entities and denormalize analytic tables for performance.

- Partitioning: enables pruning, faster maintenance, and easier archiving.

- Sharding: use only when horizontal capacity is the constraint across nodes.

- Types and columns: choose narrow, exact types (for example integers rather than strings for keys) to shrink storage and speed operations.

Monitor and tune: execution plans, DMVs, and performance tools

Collect and trend execution metrics so small regressions don’t become production outages. Track duration and compare rows scanned versus returned. Watch for disk spills and out-of-memory errors that force rewrites or schema changes.

Use the platform tools you have. SQL Server exposes plan XML and missing-index DMVs; capture those suggestions and trend them. Cloud providers offer native diagnostics, such as BigQuery execution details, Snowflake Query Profile, and Redshift visualizers, that reveal hot spots fast.

- Review plans regularly: spot scans, key lookups, tempdb spills, and heavy sorts that hurt performance.

- Track runtime metrics: elapsed time, CPU, logical reads, and rows read vs. returned to shrink read-to-return ratios.

- Prioritize with DMVs: collect missing-index advice and expensive statements so changes yield the biggest wins.

- Watch plan complexity: many joined tables expand the optimizer search space, and the optimizer may settle for a suboptimal plan when it runs out of search time.

Create feedback loops between developers and DBAs. Include plan checks in code review, feed production telemetry into index and model management, and use platform-native tools to guide tuning work for reliable operations.

How to optimize SQL Server queries today: a practical, prioritized checklist

How to act today: target high-cost statements with small experiments that change projection, index choices, or joins one at a time.

Start with projection: replace SELECT * with explicit columns to cut I/O and network time. Then run before/after tests to measure gains.

Examine execution plans and DMVs, add or adjust nonclustered indexes for hot predicates, and use INCLUDE columns only when lookups remain a bottleneck.

Refactor OR across fields into separate SELECTs combined by UNION ALL when duplicates aren’t a concern. Prefer EXISTS for large membership checks.

Limit returned rows with TOP, stable ORDER BY, and keyset pagination. Encapsulate repeatable work in stored procedures and centralize filters.

Partition big tables, monitor rows scanned vs. returned and tempdb spills, and iterate with benchmarks. Keep a short backlog of candidate index changes and prune overlaps regularly for sustained performance wins.